Xinzhiyuan (新智元) report

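[Xinzhiyuan digest] DeepSeek's second open-source release has arrived on schedule. This time the company has open-sourced DeepEP, an expert-parallel (EP) communication library for MoE training and inference. It supports FP8, is designed for Hopper GPUs, and targets low-latency, high-throughput training and inference.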

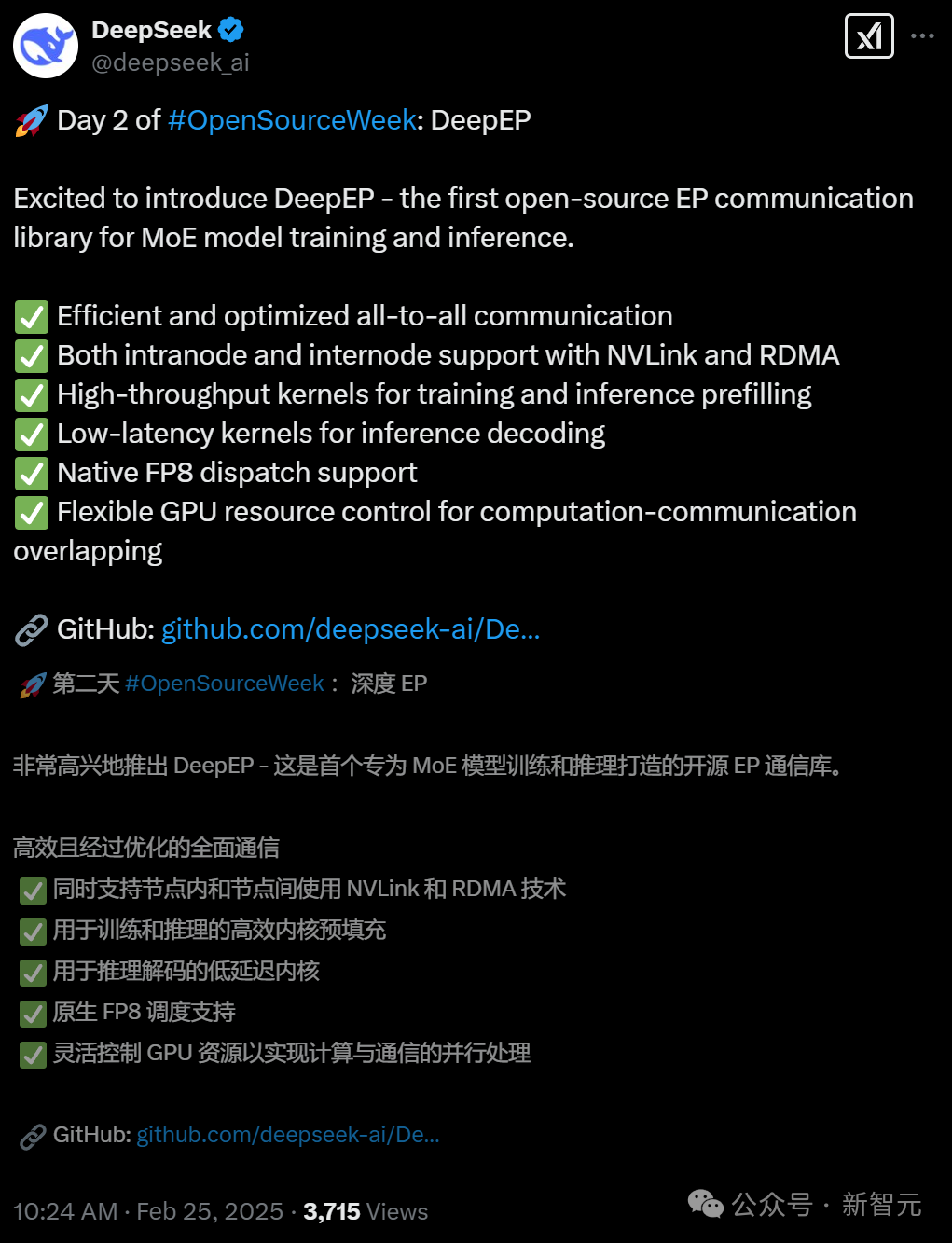

DeepSeek has just released the second installment of its open-source series: DeepEP!

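It features efficient, optimized all-to-all communication, with the following highlights:

- Intranode and internode support over NVLink and RDMA
- High-throughput kernels for training and inference prefilling
- Low-latency kernels for inference decoding
- Native FP8 dispatch support
- Flexible GPU resource control for computation-communication overlap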

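Specifically, DeepEP is a communication library tailored for Mixture-of-Experts (MoE) models and expert parallelism (EP).

It provides high-throughput, low-latency all-to-all GPU kernels, also known as MoE "dispatch" and "combine". The library also supports low-precision operations, including FP8.

To align with the group-limited gating algorithm proposed in the DeepSeek-V3 paper, DeepEP offers a set of kernels optimized for bandwidth forwarding between different network domains, for example forwarding data from the NVLink domain to the RDMA domain.

These kernels deliver high throughput, which makes them suitable for training and inference prefilling. They also support control over the number of streaming multiprocessors (SMs) used.

For latency-sensitive inference decoding, DeepEP includes a set of low-latency kernels implemented with pure RDMA to minimize delay.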
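To make the routing pattern behind group-limited gating concrete, here is a minimal pure-Python sketch: experts are split into equal groups, the best groups are chosen first, and the final experts are picked only from those groups. The function name `group_limited_topk`, the use of the maximum expert score as the group score, and the toy sizes are assumptions for illustration; this is neither the DeepSeek-V3 router nor a DeepEP API.

```python
from typing import List


def group_limited_topk(scores: List[float], num_groups: int,
                       topk_groups: int, topk_experts: int) -> List[int]:
    """Pick experts for one token, restricted to the best-scoring groups.

    Assumption: a group's score is the maximum score of its experts.
    """
    assert len(scores) % num_groups == 0
    group_size = len(scores) // num_groups
    assert topk_experts <= topk_groups * group_size

    # Score each contiguous block of experts (one block per group).
    group_scores = [max(scores[g * group_size:(g + 1) * group_size])
                    for g in range(num_groups)]

    # Keep only the highest-scoring groups.
    kept_groups = sorted(range(num_groups), key=lambda g: group_scores[g],
                         reverse=True)[:topk_groups]

    # Candidate experts are those that live in a kept group.
    candidates = [e for g in kept_groups
                  for e in range(g * group_size, (g + 1) * group_size)]

    # Final top-k over the candidates only.
    chosen = sorted(candidates, key=lambda e: scores[e], reverse=True)[:topk_experts]
    return sorted(chosen)


# Toy example: 8 experts in 4 groups of 2; keep 2 groups, then 3 experts.
toy_scores = [0.9, 0.1, 0.2, 0.3, 0.8, 0.15, 0.4, 0.05]
selected = group_limited_topk(toy_scores, num_groups=4, topk_groups=2, topk_experts=3)
print(selected)  # [0, 4, 5]
```

In the toy example, expert 6 has the third-highest score overall but is skipped because its group was not selected. If each group is placed on one node (an assumption about deployment), this kind of restriction bounds how many nodes a single token's data has to reach.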

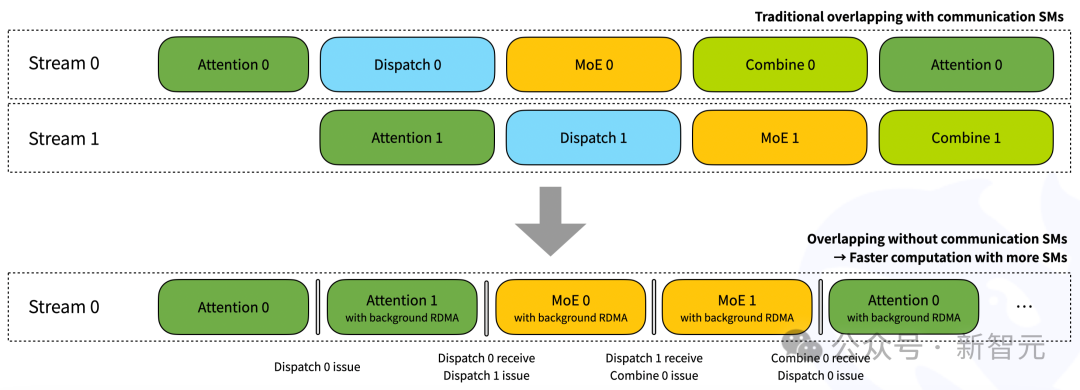

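The library also introduces a hook-based communication-computation overlapping method that does not occupy any SM resources.

DeepSeek notes that the implementation in this library may differ slightly from the DeepSeek-V3 paper.

One software engineer commented enthusiastically: "The level of optimization DeepSeek has reached on MoE models is impressive, because MoE models are notoriously hard to handle given their scale and complexity. That DeepEP deals with these problems so precisely, using advanced hardware such as NVLink and RDMA and supporting FP8, is amazing."

Other commenters called it the industry's first communication library for MoE model training and inference.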

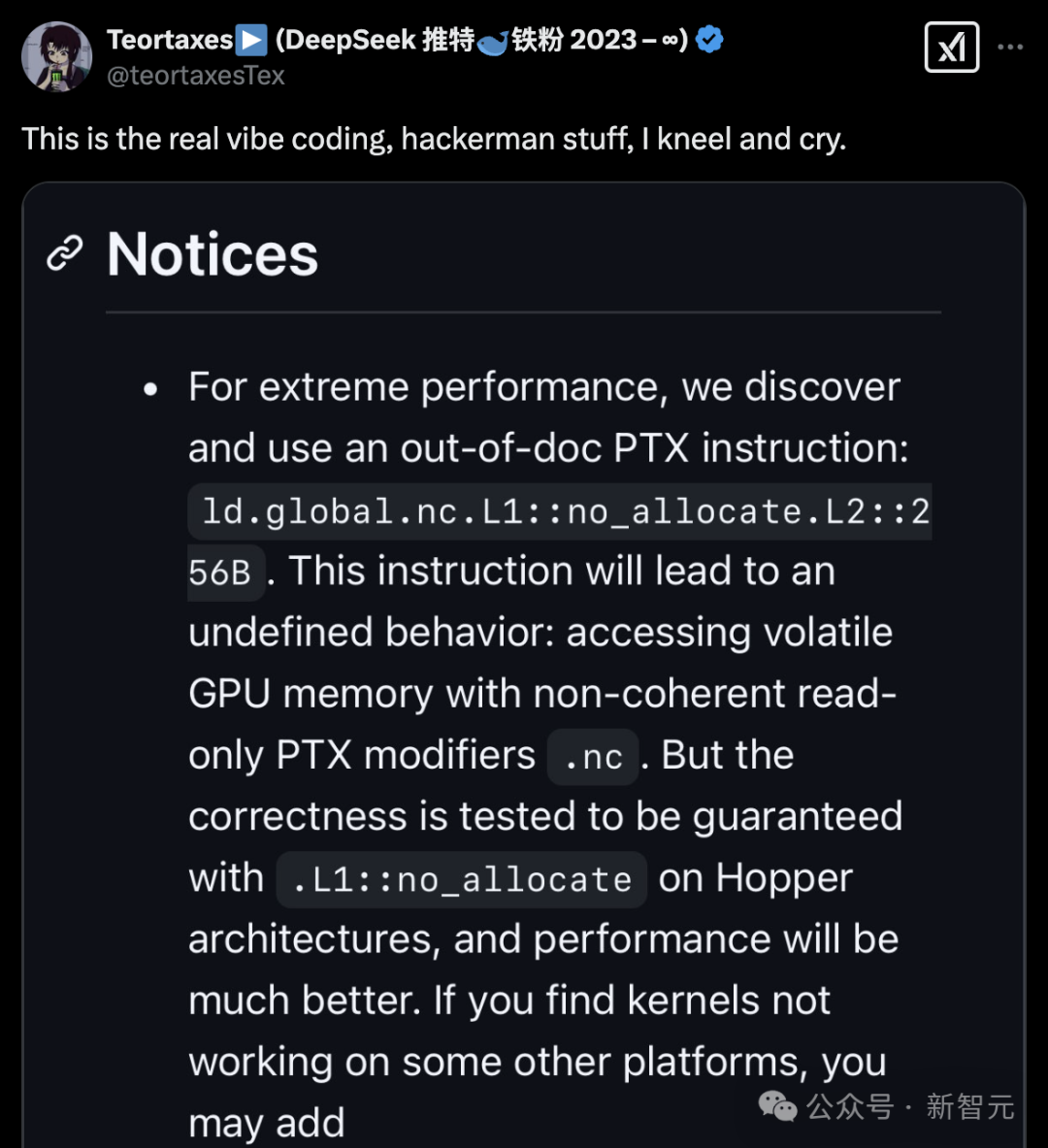

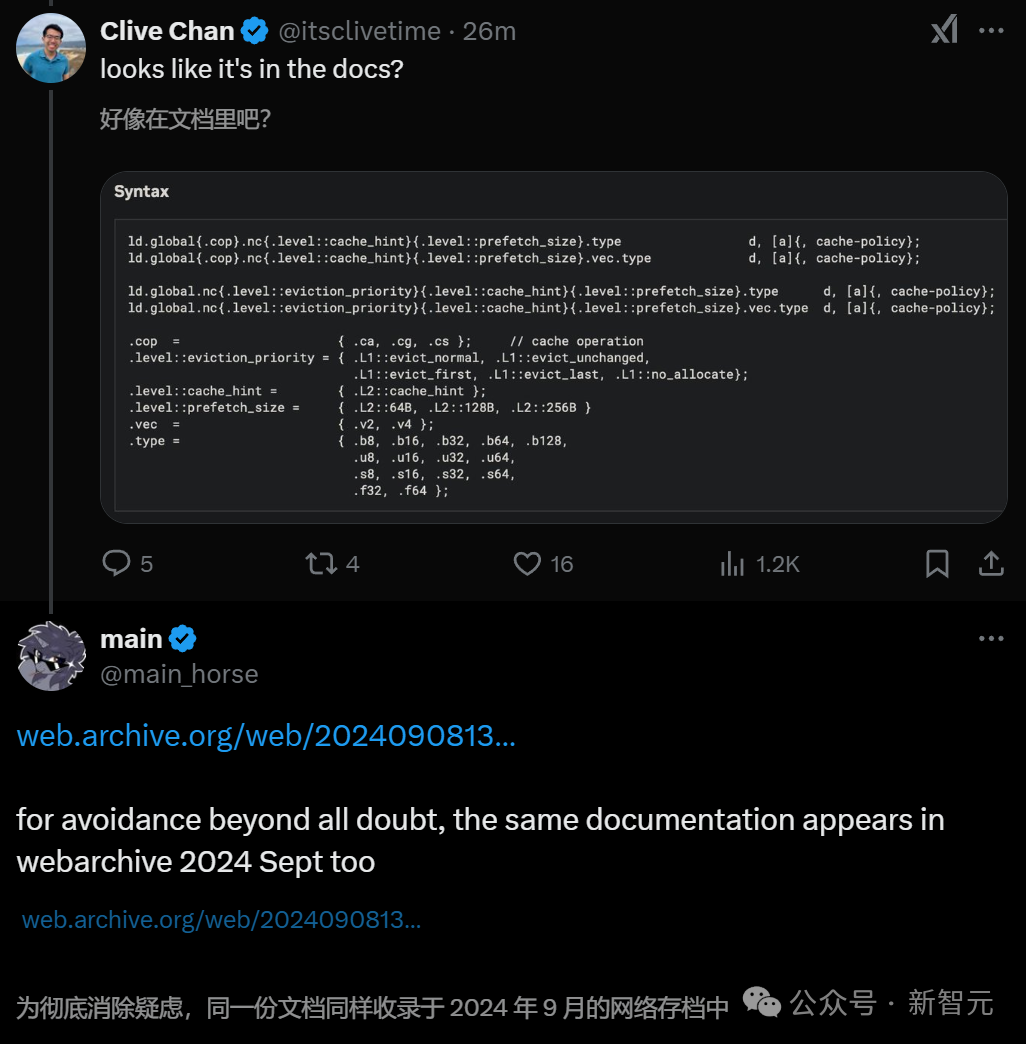

An Nvidia "special instruction" missing from the documentation, unexpectedly uncovered by DeepSeek

Performance

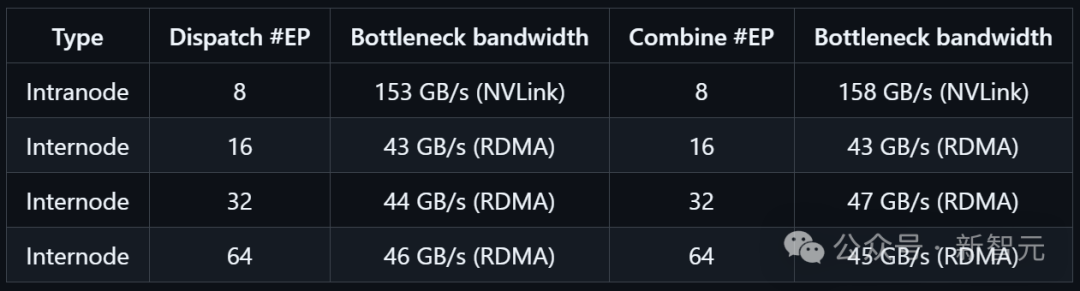

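Normal kernels with NVLink and RDMA forwarding

The tests follow the DeepSeek-V3/R1 pretraining setting: 4096 tokens per batch, a hidden size of 7168, top-4 group selection, top-8 expert selection, FP8 for dispatch, and BF16 for combine.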

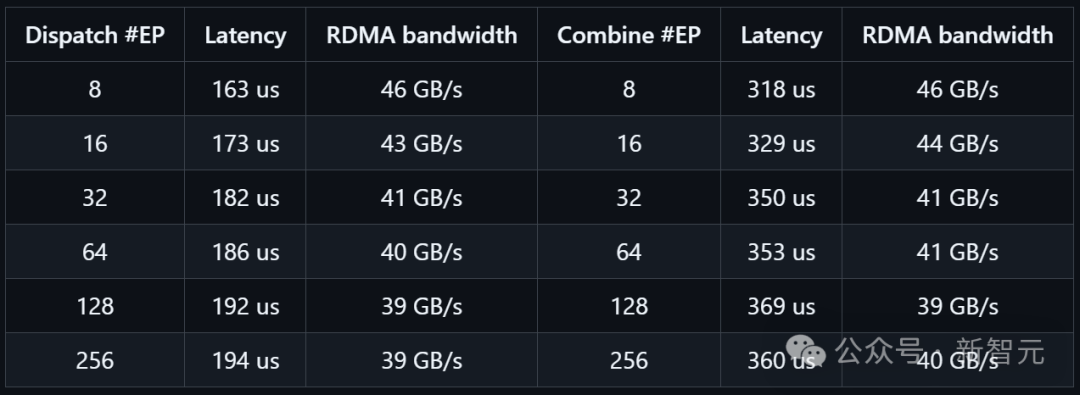

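Low-latency kernels with pure RDMA

The team tested the low-latency kernels on H800 GPUs, each connected to a CX7 InfiniBand RDMA (remote direct memory access) network card rated at 400 Gb/s, which corresponds to a maximum bandwidth of about 50 GB/s.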

The tests follow a typical DeepSeek-V3/R1 production setting: 128 tokens per batch, a hidden size of 7168, top-8 expert selection, FP8 for dispatch, and BF16 for combine.
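The figures above invite a quick back-of-the-envelope check. The sketch below converts the 400 Gb/s link rate to GB/s, then estimates the hidden-state payload of one decoding batch and the time it would need at peak bandwidth. It assumes a worst case in which every token is copied to 8 distinct remote ranks and ignores scaling factors, indices, and other metadata; the helper names are made up for illustration, and none of these numbers are measured results.

```python
HIDDEN = 7168            # hidden size from the configuration above
TOP_K = 8                # experts selected per token
FP8_BYTES = 1            # bytes per element when dispatching
BF16_BYTES = 2           # bytes per element when combining


def gbit_to_gbyte_per_s(gigabits_per_second: float) -> float:
    """Convert a link rate in Gb/s to GB/s (8 bits per byte, no protocol overhead)."""
    return gigabits_per_second / 8


def payload_bytes(num_tokens: int, bytes_per_element: int) -> int:
    """Hidden-state bytes if every token is copied to TOP_K distinct ranks."""
    return num_tokens * TOP_K * HIDDEN * bytes_per_element


def ideal_transfer_us(num_bytes: int, gbytes_per_second: float) -> float:
    """Transfer time in microseconds at the given bandwidth, with no latency term."""
    return num_bytes / (gbytes_per_second * 1e9) * 1e6


peak = gbit_to_gbyte_per_s(400)
dispatch_bytes = payload_bytes(128, FP8_BYTES)
combine_bytes = payload_bytes(128, BF16_BYTES)

print(peak)                           # 50.0
print(dispatch_bytes, combine_bytes)  # 7340032 14680064
print(round(ideal_transfer_us(dispatch_bytes, peak), 1))
```

Under these assumptions a combine moves twice as many bytes as a dispatch, simply because BF16 elements are twice as wide as FP8 elements.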

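Quick start

Requirements

- Nvidia Hopper GPUs (more architectures or devices may be supported in the future)
- Python 3.8 or later
- CUDA 12.3 or later
- PyTorch 2.1 or later
- NVLink for intranode (single-machine, multi-GPU) communication
- An RDMA network for internode (multi-machine) communication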
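The version floors in this list can be checked programmatically. The following is a minimal sketch using only the standard library; the helper names `major_minor` and `meets_minimum` and the sample version strings are hypothetical, and the check covers version numbers only, not the GPU or network requirements.

```python
import re
import sys
from typing import Tuple


def major_minor(version: str) -> Tuple[int, int]:
    """Extract (major, minor) from strings such as "2.1.0+cu121" or "12.3"."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return int(match.group(1)), int(match.group(2))


def meets_minimum(version: str, minimum: Tuple[int, int]) -> bool:
    """Compare numerically, so that "12.10" counts as newer than "12.3"."""
    return major_minor(version) >= minimum


python_ok = sys.version_info[:2] >= (3, 8)
print(python_ok)
print(meets_minimum("2.1.0+cu121", (2, 1)))  # a PyTorch-style version string
print(meets_minimum("12.1", (12, 3)))        # a CUDA-style version string
```

In a real environment the two strings would typically come from `torch.__version__` and `torch.version.cuda`.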

Download and install the NVSHMEM dependency by following the guide at:

https://github.com/deepseek-ai/DeepEP/blob/main/third-party/README.md

Development

The following snippet builds and tests the Python package with NVSHMEM integrated:

    # Build and make symbolic links for SO files
    NVSHMEM_DIR=/path/to/installed/nvshmem python setup.py build
    # You may modify the specific SO names according to your own platform
    ln -s build/lib.linux-x86_64-cpython-38/deep_ep_cpp.cpython-38-x86_64-linux-gnu.so

    # Run test cases
    # NOTES: you may modify the `init_dist` function in `tests/utils.py`
    # according to your own cluster settings, and launch into multiple nodes
    python tests/test_intranode.py
    python tests/test_internode.py
    python tests/test_low_latency.py

To install the package:

    NVSHMEM_DIR=/path/to/installed/nvshmem python setup.py install

Then import `deep_ep` in your Python project, and you are ready to go!

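Network configurations

DeepEP has been fully tested on InfiniBand networks. In theory, it is also compatible with RDMA over Converged Ethernet (RoCE).

Traffic isolation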

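InfiniBand supports traffic isolation through Virtual Lanes (VL).

To prevent interference between different types of traffic, the team recommends assigning workloads to separate virtual lanes as follows:

- Workloads using the normal kernels
- Workloads using the low-latency kernels
- Other workloads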
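As a hedged illustration of such a split, the sketch below gives each workload class its own InfiniBand service level through an environment variable set before launch. `NVSHMEM_IB_SL` is an NVSHMEM environment variable that the DeepEP README (not this article) names for this purpose; the service-level numbers, the workload names, and the helper `env_for_workload` are hypothetical, and how service levels map to virtual lanes depends on the fabric configuration.

```python
import os
from typing import Dict

# Hypothetical service-level assignment; real values depend on the fabric setup.
SERVICE_LEVELS: Dict[str, int] = {
    "normal_kernels": 0,
    "low_latency_kernels": 1,
    "other": 2,
}


def env_for_workload(workload: str) -> Dict[str, str]:
    """Return a copy of the current environment with the service level set."""
    env = dict(os.environ)
    env["NVSHMEM_IB_SL"] = str(SERVICE_LEVELS[workload])
    return env


decode_env = env_for_workload("low_latency_kernels")
print(decode_env["NVSHMEM_IB_SL"])  # 1
```

The returned dictionary could then be passed as the `env` argument of `subprocess.Popen` when starting the corresponding job.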

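Adaptive routing

Adaptive routing is an advanced routing feature provided by InfiniBand switches that spreads traffic evenly across multiple paths.

Currently, the low-latency kernels support adaptive routing, while the normal kernels do not (support is expected to be added soon). Enabling adaptive routing for the normal internode kernels may lead to deadlocks or data corruption.

For the low-latency kernels, enabling adaptive routing can completely eliminate network congestion caused by routing conflicts, but it also introduces additional latency.

The team recommends the following configuration for best performance:

- Enable adaptive routing in environments with heavy network load
- Use static routing in environments with light network load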
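The guidance above can be condensed into a small decision helper. This is only a sketch of the stated rules; the function name `choose_routing` and the string labels are hypothetical, and what counts as "heavy" load is left to the operator because the text gives no threshold.

```python
def choose_routing(kernel: str, heavy_load: bool) -> str:
    """Return "adaptive" or "static" following the guidance above.

    `kernel` is "normal" or "low_latency".
    """
    if kernel == "normal":
        # Adaptive routing may deadlock or corrupt data with normal internode kernels.
        return "static"
    if kernel == "low_latency":
        # Adaptive routing removes routing-conflict congestion but adds latency.
        return "adaptive" if heavy_load else "static"
    raise ValueError(f"unknown kernel type: {kernel!r}")


print(choose_routing("low_latency", heavy_load=True))   # adaptive
print(choose_routing("normal", heavy_load=True))        # static
```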

Congestion control

Congestion control is disabled, because the team has not observed significant congestion in its production environment.

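Interfaces and examples

Example use in model training or inference prefilling

The normal kernels can be used in model training or in the inference prefilling phase (which has no backward pass), as the example code below shows.

The code implements dispatch and combine for a distributed Mixture-of-Experts (MoE) model in PyTorch, with communication-computation overlap in both the forward and backward passes.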

    import torch
    import torch.distributed as dist
    from typing import List, Tuple, Optional, Union

    from deep_ep import Buffer, EventOverlap

    # Communication buffer (will allocate at runtime)
    _buffer: Optional[Buffer] = None

    # Set the number of SMs to use
    # NOTES: this is a static variable
    Buffer.set_num_sms(24)


    # You may call this function at the framework initialization
    def get_buffer(group: dist.ProcessGroup, hidden_bytes: int) -> Buffer:
        global _buffer

        # NOTES: you may also replace `get_*_config` with your auto-tuned results via all the tests
        num_nvl_bytes, num_rdma_bytes = 0, 0
        for config in (Buffer.get_dispatch_config(group.size()), Buffer.get_combine_config(group.size())):
            num_nvl_bytes = max(config.get_nvl_buffer_size_hint(hidden_bytes, group.size()), num_nvl_bytes)
            num_rdma_bytes = max(config.get_rdma_buffer_size_hint(hidden_bytes, group.size()), num_rdma_bytes)

        # Allocate a buffer if not existed or not enough buffer size
        # NOTES: the adaptive routing configuration of the network **must be off**
        if _buffer is None or _buffer.group != group or _buffer.num_nvl_bytes < num_nvl_bytes or _buffer.num_rdma_bytes < num_rdma_bytes:
            _buffer = Buffer(group, num_nvl_bytes, num_rdma_bytes)
        return _buffer


    def get_hidden_bytes(x: torch.Tensor) -> int:
        t = x[0] if isinstance(x, tuple) else x
        return t.size(1) * max(t.element_size(), 2)


    def dispatch_forward(x: Union[torch.Tensor, Tuple[torch.Tensor, torch.Tensor]],
                         topk_idx: torch.Tensor, topk_weights: torch.Tensor,
                         num_experts: int, previous_event: Optional[EventOverlap] = None) -> \
            Tuple[Union[torch.Tensor, Tuple[torch.Tensor, torch.Tensor]], torch.Tensor, torch.Tensor, List, Tuple, EventOverlap]:
        # NOTES: an optional `previous_event` means a CUDA event captured that you want to make it as a dependency
        # of the dispatch kernel, it may be useful with communication-computation overlap. For more information, please
        # refer to the docs of `Buffer.dispatch`
        global _buffer

        # Calculate layout before actual dispatch
        num_tokens_per_rank, num_tokens_per_rdma_rank, num_tokens_per_expert, is_token_in_rank, previous_event = \
            _buffer.get_dispatch_layout(topk_idx, num_experts,
                                        previous_event=previous_event, async_finish=True,
                                        allocate_on_comm_stream=previous_event is not None)
        # Do MoE dispatch
        # NOTES: the CPU will wait for GPU's signal to arrive, so this is not compatible with CUDA graph
        # For more advanced usages, please refer to the docs of the `dispatch` function
        recv_x, recv_topk_idx, recv_topk_weights, num_recv_tokens_per_expert_list, handle, event = \
            _buffer.dispatch(x, topk_idx=topk_idx, topk_weights=topk_weights,
                             num_tokens_per_rank=num_tokens_per_rank, num_tokens_per_rdma_rank=num_tokens_per_rdma_rank,
                             is_token_in_rank=is_token_in_rank, num_tokens_per_expert=num_tokens_per_expert,
                             previous_event=previous_event, async_finish=True,
                             allocate_on_comm_stream=True)
        # For event management, please refer to the docs of the `EventOverlap` class
        return recv_x, recv_topk_idx, recv_topk_weights, num_recv_tokens_per_expert_list, handle, event


    def dispatch_backward(grad_recv_x: torch.Tensor, grad_recv_topk_weights: torch.Tensor, handle: Tuple) -> \
            Tuple[torch.Tensor, torch.Tensor, EventOverlap]:
        global _buffer

        # The backward process of MoE dispatch is actually a combine
        # For more advanced usages, please refer to the docs of the `combine` function
        combined_grad_x, combined_grad_recv_topk_weights, event = \
            _buffer.combine(grad_recv_x, handle, topk_weights=grad_recv_topk_weights, async_finish=True)

        # For event management, please refer to the docs of the `EventOverlap` class
        return combined_grad_x, combined_grad_recv_topk_weights, event


    def combine_forward(x: torch.Tensor, handle: Tuple, previous_event: Optional[EventOverlap] = None) -> \
            Tuple[torch.Tensor, EventOverlap]:
        global _buffer

        # Do MoE combine
        # For more advanced usages, please refer to the docs of the `combine` function
        combined_x, _, event = _buffer.combine(x, handle, async_finish=True, previous_event=previous_event,
                                               allocate_on_comm_stream=previous_event is not None)

        # For event management, please refer to the docs of the `EventOverlap` class
        return combined_x, event


    def combine_backward(grad_combined_x: Union[torch.Tensor, Tuple[torch.Tensor, torch.Tensor]],
                         handle: Tuple, previous_event: Optional[EventOverlap] = None) -> \
            Tuple[Union[torch.Tensor, Tuple[torch.Tensor, torch.Tensor]], EventOverlap]:
        global _buffer

        # The backward process of MoE combine is actually a dispatch
        # For more advanced usages, please refer to the docs of the `dispatch` function
        grad_x, _, _, _, _, event = _buffer.dispatch(grad_combined_x, handle=handle, async_finish=True,
                                                     previous_event=previous_event,
                                                     allocate_on_comm_stream=previous_event is not None)

        # For event management, please refer to the docs of the `EventOverlap` class
        return grad_x, event
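The `get_hidden_bytes` helper in the example sizes the buffer per token as the hidden dimension times at least two bytes per element. A small stand-alone sketch of that arithmetic follows, using plain integers instead of tensors so that it runs without PyTorch or DeepEP; the function name `hidden_bytes` is made up here.

```python
def hidden_bytes(hidden_size: int, element_size: int) -> int:
    """Mirror `t.size(1) * max(t.element_size(), 2)` with plain integers."""
    return hidden_size * max(element_size, 2)


# FP8 (1 byte) and BF16 (2 bytes) inputs give the same per-token size.
print(hidden_bytes(7168, 1))  # 14336
print(hidden_bytes(7168, 2))  # 14336
```

One plausible reading is that the same buffer must also hold BF16 data for the combine step, so FP8 inputs are sized as if they were two bytes wide; the source does not state the reason.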

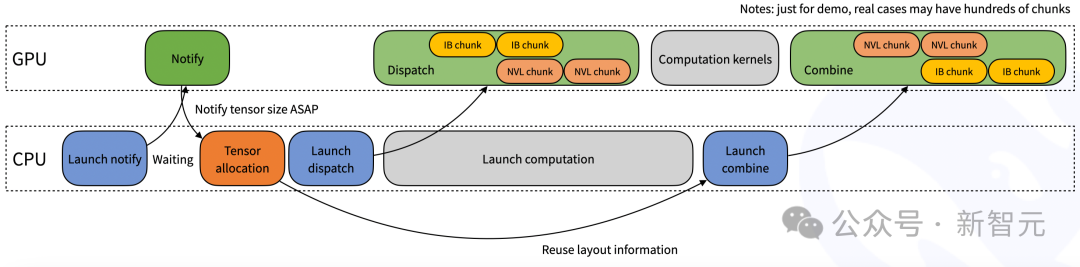

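In addition, inside the dispatch function, the number of tokens that the current rank will receive may not be known in advance.

In that case, as the accompanying figure shows, the CPU waits synchronously until the GPU signals the received-token count.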
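The waiting pattern can be pictured with an analogy in plain Python threads. This is not CUDA code and not how DeepEP is implemented; the thread stands in for the GPU, the `threading.Event` for its signal, and the count of 37 tokens is invented. The point is only that the host cannot size the receive buffer until the count arrives, which matches the note in the example code that this path is not compatible with CUDA graphs.

```python
import threading
import time
from typing import List

recv_count: List[int] = [-1]     # -1 means "not written yet"
count_ready = threading.Event()  # stands in for the GPU's signal


def fake_gpu_kernel() -> None:
    """Pretend to be the device: compute the token count, then signal the host."""
    time.sleep(0.01)
    recv_count[0] = 37           # hypothetical number of received tokens
    count_ready.set()


threading.Thread(target=fake_gpu_kernel).start()

# The host blocks here; only afterwards does it know how large the
# receive buffer must be.
count_ready.wait()
recv_buffer = [0.0] * recv_count[0]
print(len(recv_buffer))  # 37
```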

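Example use in inference decoding

In the decoding phase of inference, the low-latency kernels (optimized for real-time inference) can be used to improve performance.

See the following example code for usage.

The code implements dispatch and combine for a distributed Mixture-of-Experts (MoE) model in low-latency mode. It works with PyTorch and CUDA graphs and is suited to efficient inference.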

    import torch
    import torch.distributed as dist
    from typing import Tuple, Optional

    from deep_ep import Buffer

    # Communication buffer (will allocate at runtime)
    # NOTES: there is no SM control API for the low-latency kernels
    _buffer: Optional[Buffer] = None


    # You may call this function at the framework initialization
    def get_buffer(group: dist.ProcessGroup, num_max_dispatch_tokens_per_rank: int, hidden: int, num_experts: int) -> Buffer:
        # NOTES: the low-latency mode will consume much more space than the normal mode
        # So we recommend that `num_max_dispatch_tokens_per_rank` (the actual batch size in the decoding engine) should be less than 256
        global _buffer
        num_rdma_bytes = Buffer.get_low_latency_rdma_size_hint(num_max_dispatch_tokens_per_rank, hidden, group.size(), num_experts)

        # Allocate a buffer if not existed or not enough buffer size
        if _buffer is None or _buffer.group != group or not _buffer.low_latency_mode or _buffer.num_rdma_bytes < num_rdma_bytes:
            # NOTES: for best performance, the QP number **must** be equal to the number of the local experts
            assert num_experts % group.size() == 0
            _buffer = Buffer(group, 0, num_rdma_bytes, low_latency_mode=True, num_qps_per_rank=num_experts // group.size())
        return _buffer


    def low_latency_dispatch(hidden_states: torch.Tensor, topk_idx: torch.Tensor, num_max_dispatch_tokens_per_rank: int, num_experts: int):
        global _buffer

        # Do MoE dispatch, compatible with CUDA graph (but you may restore some buffer status once you replay)
        recv_hidden_states, recv_expert_count, handle, event, hook = \
            _buffer.low_latency_dispatch(hidden_states, topk_idx, num_max_dispatch_tokens_per_rank, num_experts,
                                         async_finish=False, return_recv_hook=True)

        # NOTES: the actual tensor will only be received once you call `hook()`,
        # it is useful for double-batch overlapping, but **without any SM occupation**
        # If you don't want to overlap, please set `return_recv_hook=False`
        # Later, you can use our GEMM library to do the computation with this specific format
        return recv_hidden_states, recv_expert_count, handle, event, hook


    def low_latency_combine(hidden_states: torch.Tensor,
                            topk_idx: torch.Tensor, topk_weights: torch.Tensor, handle: Tuple):
        global _buffer

        # Do MoE combine, compatible with CUDA graph (but you may restore some buffer status once you replay)
        combined_hidden_states, event_overlap, hook = \
            _buffer.low_latency_combine(hidden_states, topk_idx, topk_weights, handle,
                                        async_finish=False, return_recv_hook=True)

        # NOTES: the same behavior as described in the dispatch kernel
        return combined_hidden_states, event_overlap, hook

For how two micro-batches are overlapped, refer to the accompanying diagram.

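The receiving hook interface implemented by the team lets RDMA network traffic proceed in the background, a design that does not take any GPU SMs away from computation.

Note that the overlapped portion can be adjusted, because the four stages (attention, dispatch, MoE, and combine) may not take the same amount of time.

The settings of each stage can therefore be tuned to the workload to obtain the best performance.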
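A toy schedule can illustrate the idea of overlapping two micro-batches with a receive hook. The sketch below uses stub functions that only record the order of events; `issue_dispatch`, `compute`, and the particular interleaving are hypothetical, no real communication takes place, and the combine stage is left out for brevity.

```python
from typing import Callable, List

log: List[str] = []


def issue_dispatch(batch: str) -> Callable[[], None]:
    """Start a (pretend) transfer and return a hook that completes it."""
    log.append(f"dispatch-issue {batch}")

    def hook() -> None:
        log.append(f"dispatch-recv {batch}")

    return hook


def compute(stage: str, batch: str) -> None:
    log.append(f"{stage} {batch}")


# Two micro-batches, A and B: communication for one overlaps compute for the other.
compute("attention", "A")
hook_a = issue_dispatch("A")   # transfer for A proceeds in the background
compute("attention", "B")      # ...while B's attention runs
hook_a()                       # A's tokens are needed now, so finish receiving
hook_b = issue_dispatch("B")
compute("moe", "A")            # A's expert computation overlaps B's transfer
hook_b()
compute("moe", "B")

print(log)
```

Because the stages rarely take the same time, where each `hook()` call is placed is the knob to tune: calling it later leaves more room for overlap but delays the computation that depends on the received data.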

(Text: Xinzhiyuan)